Cited primarily from an article at http://computer.getmash.net/

Speech recognition has long languished in the no-man’s land between sci-fi fantasy (“Computer, engage warp drive!”) and everyday reality.

But that’s changing fast. Advances in computing power and artificial intelligence, powerful APIs and the newly available WSR Macros are making speech recognition the next big step for everyday use by "non-geek" users, for user-interface design and now for electronic voice-based security.

As to voice-based security: A whole host of highly advanced speech technologies, including emotion and lie detection, are moving from the lab to the marketplace.

“This is not a new technology,” says Daniel Hong, an analyst at Datamonitor who specializes in speech technology. “But it took a long time for Moore’s Law to make it viable.”

Mr. Hong estimates that the speech technology market is worth more than $2 billion, with plenty of growth in embedded and network apps.

And it’s about time. Speech recognition technology has been around since the 1950s, but only recently have computer processors and the accompanying artificial intelligence become powerful enough to handle the complex algorithms required to recognize our speech, both locally and remotely, improve our lives and productivity, and open our eyes to the long tail of speech recognition fields.

A few examples:

There are already several capable voice-controlled technologies on the market. You can issue spoken commands to devices like Motorola’s Mobile TV DH01n, a mobile TV with navigation capabilities, and a host of telematics GPS devices. Microsoft recently announced a deal to slip voice-activation software into cars manufactured by Hyundai and Kia, and its TellMe division is investigating voice-recognition applications for the iPhone. And Indesit, Europe’s second-largest home appliances manufacturer, just introduced the world’s first voice-controlled oven.

Yet as promising as this year’s crop of speech-controlled devices is, it’s just the beginning.

Speech technology comes in several flavors, including the speech recognition that drives voice-activated mobile devices; network systems that power IVRs using automated speech recognition; the unequalled desktop Vista Speech Recognition, now with available macros (which we use to post and write articles); and the long-standing standard in the healthcare industry, the highly impressive network-based Philips SpeechMagic systems.

Voice biometrics (the true technical description of the often mis-used phrase "voice recognition") is a particularly hot area. Every individual has a unique voice print that is determined by the physical characteristics of his or her vocal tract. By analyzing speech samples for telltale acoustic features, voice biometrics can verify a speaker’s identity either in person or over the phone, without the specialized hardware required for fingerprint or retinal scanning.
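To make the verification step a little more concrete, here is a minimal sketch of the comparison at its core. It assumes that some front end (not shown) has already reduced each speech sample to a fixed-length list of acoustic features; the feature values, the `verify_speaker` name and the 0.85 threshold are all hypothetical, and real voice-biometric products use far richer models than a single similarity score.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no information to compare
    return dot / (norm_a * norm_b)


def verify_speaker(enrolled_print, sample_features, threshold=0.85):
    """Accept the speaker if the new sample is close enough to the enrolled print.

    The threshold is an illustrative assumption, not a recommended value.
    """
    return cosine_similarity(enrolled_print, sample_features) >= threshold


# Hypothetical feature vectors standing in for real acoustic measurements.
enrolled = [0.62, 0.11, 0.45, 0.80]
same_speaker = [0.60, 0.13, 0.44, 0.79]
other_speaker = [0.10, 0.90, 0.20, 0.05]

print(verify_speaker(enrolled, same_speaker))
print(verify_speaker(enrolled, other_speaker))
```

Because only numbers derived from the audio are compared, the same check works in person or over a phone line, which is the point made above about not needing specialized scanning hardware.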

The technology can also have unanticipated consequences. When the Australian social services agency Centrelink began using voice biometrics to authenticate users of its automated phone system, the software started to identify welfare fraudsters who were claiming multiple benefits — something a simple password system could never do.

The Federal Financial Institutions Examination Council has issued guidance requiring stronger security than simple ID and password combinations, which is expected to drive widespread adoption of voice verification by U.S. financial institutions in coming years. Ameritrade, Volkswagen and European banking giant ABN AMRO all employ voice-authentication systems already.

Advanced voice-recognition systems that can tell if a speaker is agitated, anxious or lying are also in the pipeline.

Computer scientists (e.g. at Carnegie Mellon) have already developed software that can identify emotional states and even truthfulness by analyzing acoustic features like pitch and intensity, and lexical ones like the use of contractions and particular parts of speech. And they are honing their algorithms using the massive amounts of real-world speech data collected by call centers and free 411 speech-driven services such as the extremely popular Goog411.
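For readers curious what "acoustic" and "lexical" cues look like in practice, the sketch below computes three very simple ones: intensity as root-mean-square amplitude, pitch via a basic autocorrelation search, and the share of words that contain an apostrophe as a stand-in for contraction use. This is an illustration only, not the researchers' software: the function names, the 75 to 400 Hz search range and the synthetic test tone are all assumptions made for the example.

```python
import math
import re


def rms_intensity(samples):
    """Root-mean-square amplitude, a crude measure of loudness."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def estimate_pitch_hz(samples, sample_rate, min_hz=75, max_hz=400):
    """Estimate pitch by finding the lag with the strongest autocorrelation.

    The search range is an assumed span for adult speech.
    """
    min_lag = int(sample_rate / max_hz)
    max_lag = min(int(sample_rate / min_hz), len(samples) - 1)
    best_lag, best_score = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag]
                    for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else 0.0


# Matches words with an internal straight apostrophe; possessives such as
# "John's" also match, so this is only a rough proxy for contractions.
APOSTROPHE_WORD = re.compile(r"\b\w+'\w+\b")


def contraction_rate(text):
    """Fraction of words in the text that contain an apostrophe."""
    words = re.findall(r"[\w']+", text)
    if not words:
        return 0.0
    return len(APOSTROPHE_WORD.findall(text)) / len(words)


# A synthetic 200 Hz tone (0.1 s at 8 kHz) stands in for recorded speech.
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * i / SAMPLE_RATE) for i in range(800)]

print(estimate_pitch_hz(tone, SAMPLE_RATE))
print(rms_intensity(tone))
print(contraction_rate("I didn't take it and I wasn't there"))
```

A real system would compute many such cues per utterance and feed them to a trained classifier; the study described further down scanned for 250 of them.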

A reliable, speech-based lie detector would be a boon to law enforcement and the military. But broader emotion detection could be useful as well. Our host company, which developed the now-standard law enforcement tool "Mobile Prosecutor", is presently experimenting with embedding voice-stress analysis in it.

In another example, a virtual call center agent that could sense a customer’s mounting frustration and route her to a live agent would save time, money and customer loyalty.
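The routing decision in that scenario could be sketched roughly as follows. The sketch assumes an emotion detector (not shown, and purely hypothetical here) that emits a frustration score between 0 and 1 for each customer utterance; the `FrustrationRouter` class, the 0.7 threshold and the three-utterance window are invented for illustration.

```python
from collections import deque


class FrustrationRouter:
    """Hand the call to a live agent once average frustration stays high."""

    def __init__(self, threshold=0.7, window=3):
        self.threshold = threshold
        # Keep only the most recent scores so one outburst does not decide alone.
        self.scores = deque(maxlen=window)

    def update(self, score):
        """Record one utterance's score and return where the call should go."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        if window_full and average >= self.threshold:
            return "live_agent"
        return "virtual_agent"


router = FrustrationRouter()
decisions = [router.update(s) for s in [0.2, 0.5, 0.8, 0.9, 0.95]]
print(decisions)
```

Averaging over a short window is a design choice made here to avoid escalating on a single noisy reading; given the accuracy figures quoted below, some smoothing of that kind seems prudent.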

“It’s not quite ready, but it’s coming pretty soon,” says James Larson, an independent speech application consultant who co-chairs the W3C Voice Browser Working Group.

Companies like Autonomy eTalk claim to have functioning anger and frustration detection systems already, but experts are skeptical. According to Julia Hirschberg, a computer scientist at Columbia University, “The systems in place are typically not ones that have been scientifically tested.”

According to Hirschberg, lab-grade systems are currently able to detect anger with accuracy rates in “the mid-70s to the low 80s.”

They are even better at detecting uncertainty, which could be helpful in automated training contexts. (Imagine a computer-based tutorial that was sufficiently savvy to drill you in areas you seemed unsure of.)

Lie detection via voice stress & syntax-pattern deviation analysis is a tougher nut to crack, but progress is being made.

In a study funded by the National Science Foundation and the Department of Homeland Security, Hirschberg and several colleagues used software tools developed by SRI to scan statements that were known to be either true or false. Scanning for 250 different acoustic and lexical cues, “We were getting accuracy maybe around the mid- to upper-60s,” she says.

That may not sound so hot, but it’s a lot better than the commercial speech-based lie detection systems currently on the market. According to independent researchers, such “voice stress analysis” systems are no more reliable than a coin toss.

It may be a while before industrial-strength emotion and lie detection come to a call center near you. But make no mistake: They are just around the proverbial corner. And they will be preceded by a mounting tide of gadgets that you can talk to, argue with and hold intelligent discussions with.

Don’t be surprised if, some day soon, your Bluetooth headset tells you to calm down. Or informs you that your last caller was lying through his teeth.

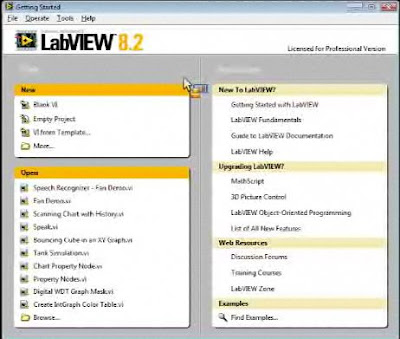

Now that Windows Speech Recognition Macros for Windows Vista™ are in feverish development, both in-house (Microsoft Speech Components Group [ listen_+at+_microsoft.com ]) and in the beta group inside the Microsoft Speech Yahoo Technical Group, desktop speech recognition is advancing daily by leaps and bounds.

Powerful WSR macros are already evolving and being used and improved daily. They can, for example:

Open e-mail messages from a specific (non-Inbox) account with the TO: / CC: / BCC: and Subject: fields already completed;

Move large blocks of extant text in and out of specific locations inside different applications;

Navigate and move items in and out of various folders inside Vista Explorer;

Perform spoken database lookups.

It will not be long before speech recognition becomes "what we just use" for most of our daily work and living activities.

(A detailed post on the powerful new WSR Macro Tool and evolving macros is coming soon; we're gathering data, useful macros and research to be sure it is both interesting and useful to all types of speech recognition users.)
